GPT-4's Opinion on Tensors of Meaning

14.05.2023

Definition of the term "Meaning Tensor"

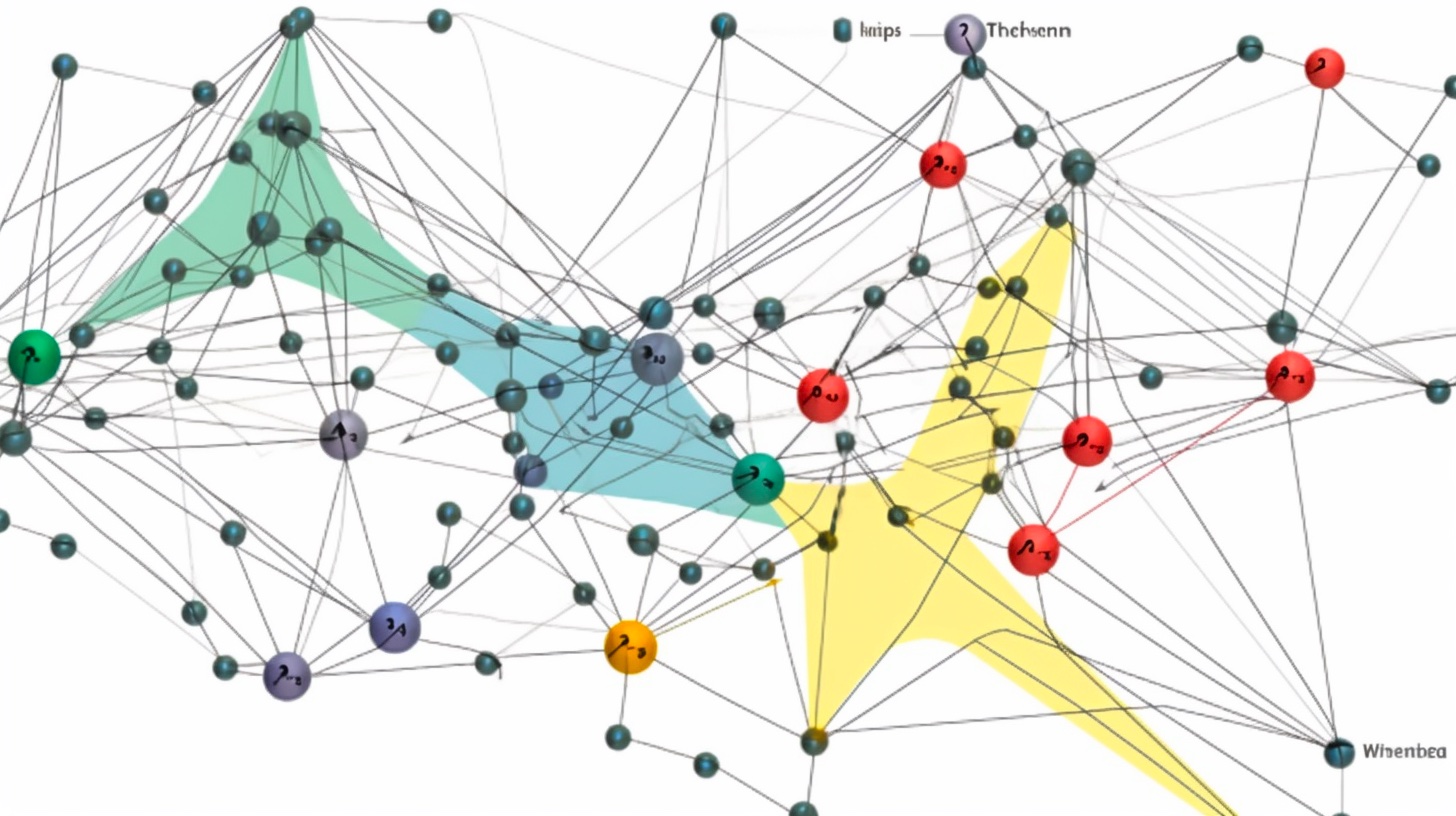

I: The meaning tensor is the space of degrees of freedom of any unit of meaning, that is, the rules by which it combines with other units of meaning.

In isolation from the space of meanings, an individual meaning has no meaning: meaning consists in the connections between one meaning tensor and other meaning tensors.

For example, "cat" is connected with the concepts "animal", "walks", "multicolored", "has a tail", "meows", and so on.

And I am not at all sure that there are only 300 or 1,000 of these connections. My hypothesis: the word embeddings most likely need to be made much larger, so that during training a neural network can itself discover these objects, concepts, and generalizations and represent them in this concept space.

Perhaps one of the components would encode a verb feature, another a noun feature, and so on.
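To make the cat example and the idea of feature-like components concrete, here is a minimal Python sketch. It assumes hand-picked, named dimensions (`animal`, `has_tail`, `meows`, `barks`, `noun`, `verb`) and made-up vectors, all hypothetical. Learned embeddings do not come with labelled axes, so this only illustrates reading a word's meaning off its relations to other concepts. Cosine similarity is used as one common way to compare such vectors.

```python
import math

# Hypothetical, hand-labelled feature axes, for illustration only.
# Real learned embeddings have no named dimensions like these.
FEATURES = ["animal", "has_tail", "meows", "barks", "noun", "verb"]

# Hypothetical toy vectors: 1.0 means "connected to this concept".
EMBEDDINGS = {
    "cat":   [1.0, 1.0, 1.0, 0.0, 1.0, 0.0],
    "dog":   [1.0, 1.0, 0.0, 1.0, 1.0, 0.0],
    "meow":  [0.0, 0.0, 1.0, 0.0, 0.0, 1.0],
    "table": [0.0, 0.0, 0.0, 0.0, 1.0, 0.0],
}


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def connections(word):
    """Names of the features that are active for a word: its links to other concepts."""
    return [name for name, x in zip(FEATURES, EMBEDDINGS[word]) if x > 0]


print(connections("cat"))                              # ['animal', 'has_tail', 'meows', 'noun']
print(cosine(EMBEDDINGS["cat"], EMBEDDINGS["dog"]))    # 0.75
print(cosine(EMBEDDINGS["cat"], EMBEDDINGS["table"]))  # 0.5
```

"cat" and "dog" share three of their four connections, so they come out more similar than "cat" and "table", which share only the noun feature.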

Hypothesis: much more attention should be paid to embeddings, because they are the incoming projections of the meaning tensors, and the quality of the meaning transformation depends on their quality.

In general, the dimensionality of the meaning tensor's components depends on the conceptual domain.

In programming languages, for example, the dimensionality is lower: `if` refers only to a conditional expression and expects to be followed by a comparison of type `boolean`.

Each concept has its own meaning tensor: its embedding. But the context itself also has its own meaning tensor, and a word in one context can have a very different meaning than in another context or with no context at all.

So we do not talk about absolute meaning tensors of words or tokens; we always talk about the meaning tensors of a particular context.

A single word on its own is also an example of a context: the absence of context. As each new word is added, the context of the whole text or phrase changes, and so does the meaning tensor of that text or phrase.
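A minimal Python sketch of how a word's representation can depend on the words before it. It uses one causal self-attention step with no learned weights (the raw embeddings serve as queries, keys and values), a heavily simplified form of the mechanism in models such as GPT. The three-dimensional embeddings and their axes are hypothetical. With a single word, the attention weights collapse to 1 and the word's contextual vector equals its static embedding, which matches the "absence of context" case above. The phrase summary as a mean of contextual vectors is likewise only an illustrative assumption.

```python
import math

# Hypothetical static embeddings; illustrative axes: [nature, finance, place].
STATIC = {
    "river": [1.0, 0.0, 0.2],
    "money": [0.0, 1.0, 0.2],
    "bank":  [0.5, 0.5, 1.0],
}


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def contextual(tokens):
    """Causal self-attention without learned weights: each token attends to itself
    and to every earlier token, using dot products of the static embeddings."""
    vectors = [STATIC[t] for t in tokens]
    out = []
    for i, q in enumerate(vectors):
        visible = vectors[: i + 1]  # the context available at position i
        weights = softmax([sum(a * b for a, b in zip(q, k)) for k in visible])
        out.append([sum(w * v[d] for w, v in zip(weights, visible)) for d in range(len(q))])
    return out


def phrase_vector(tokens):
    """Hypothetical whole-phrase summary: the mean of the contextual vectors."""
    vecs = contextual(tokens)
    return [sum(v[d] for v in vecs) / len(vecs) for d in range(len(vecs[0]))]


print(contextual(["bank"])[0])  # [0.5, 0.5, 1.0]: no context, so the static embedding
print(contextual(["river", "bank"])[1])  # "bank" pulled toward the nature axis
print(contextual(["money", "bank"])[1])  # "bank" pulled toward the finance axis
for prefix in (["money"], ["money", "bank"]):
    print(prefix, [round(x, 3) for x in phrase_vector(prefix)])
```

The same token "bank" ends up with different vectors after "river" and after "money", and the phrase summary changes as each word is appended.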

GPT-4 response

The perspective you propose highlights the dynamic and context-dependent nature of meaning, which is a key aspect of natural language. When we communicate, the meaning of words and phrases is constantly shifting and adapting based on the surrounding context, a concept you're encapsulating with the notion of context-dependent 'meaning tensors'.

There are a few elements you're considering here:

Dimensionality of 'meaning tensors': You're suggesting that the dimensionality of these 'meaning tensors' might be much larger than the dimensions typically used for word embeddings, potentially encompassing many different types of semantic and syntactic information. This is an interesting idea, and indeed, there are already some models that use higher-dimensional embeddings. However, it's also worth noting that increasing the dimensionality of embeddings can make training more computationally intensive and may risk overfitting.

Context-dependence of 'meaning tensors': You're arguing that the 'meaning tensor' of a word or phrase is not fixed, but changes based on the surrounding context. This is a critical insight that has driven much of the progress in natural language processing over the past few years. For example, models like BERT and GPT are designed to generate context-dependent representations of words. While a 'meaning tensor' in your framework would likely be much more complex than the hidden states used in these models, the basic idea of capturing context-dependent meaning is similar.

Embeddings as 'incoming projections': You're suggesting that word embeddings can be thought of as 'incoming projections' of the 'meaning tensors', and that the quality of these embeddings is crucial for the quality of the subsequent transformations. This is another interesting idea. Current practice in NLP does indeed place a lot of importance on the quality of embeddings, and there's a lot of ongoing research into ways to improve them.
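The cost side of the first point in the response (larger embeddings make training more expensive) can be estimated with simple arithmetic. A minimal sketch, assuming a hypothetical 50,000-token vocabulary and 4-byte float32 weights. It counts only the input embedding table. In a standard transformer the hidden width usually equals the embedding size d, so the attention and feed-forward weight matrices grow roughly with d², and the true cost of much larger embeddings is higher than this table alone suggests.

```python
BYTES_PER_FLOAT32 = 4


def embedding_params(vocab_size, dim):
    """Trainable weights in an input embedding table: one dim-sized vector per token."""
    return vocab_size * dim


def float32_megabytes(n_params):
    """Memory for n_params float32 weights, in megabytes (10**6 bytes)."""
    return n_params * BYTES_PER_FLOAT32 / 1_000_000


VOCAB_SIZE = 50_000  # hypothetical vocabulary size, for illustration only

for dim in (300, 1_000, 10_000):
    n = embedding_params(VOCAB_SIZE, dim)
    print(f"d={dim:>6}: {n:>13,} weights, {float32_megabytes(n):>8,.0f} MB")
```

For d = 300, 1,000 and 10,000 this gives 15 million, 50 million and 500 million weights, or 60 MB, 200 MB and 2,000 MB, before counting optimizer state or the rest of the network.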

The general direction of your thoughts aligns well with many of the current trends in NLP research, including the move towards more dynamic, context-dependent representations of meaning and the focus on improving the quality of embeddings. The notion of 'meaning tensors' could potentially provide a new and powerful framework for thinking about these issues, and could inspire new techniques and approaches. However, as with any new theoretical framework, it would need to be thoroughly developed and tested to fully understand its implications and potential benefits.